July 20, 2023

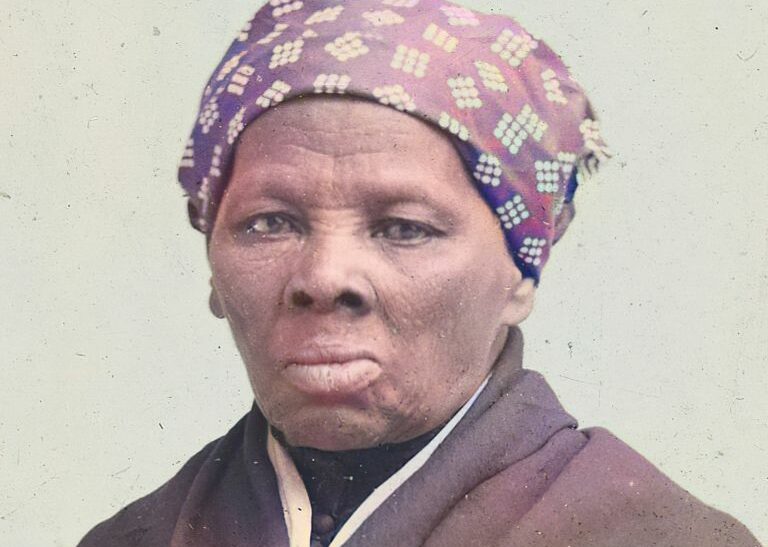

Khan Academy Is On The Wrong Side Of History And Intelligence With Its Harriet Tubman AI Avatar

Khan Academy bills its artificial intelligence tutors as “the future of learning” on its website, but the truth is a little more complicated. What the site does not state up front is that its service lets learners choose from historical figures such as Genghis Khan, Montezuma, Abigail Adams, and Harriet Tubman. The service is not yet available to everyone; it is currently restricted to a few school districts and to volunteer testers of the product.

Like ChatGPT, the avatars draw on data available on the internet to build the “vocabulary” of the bot a user is talking to.

The Washington Post tested the limits of this technology, specifically the Harriet Tubman avatar, to see whether the AI would capture Tubman’s speech patterns and spirit or come off as an offensive impression or a regurgitation of Wikipedia.

According to the article, the tool is designed to help educators foster students’ curiosity about historical figures, but there are limits to how the bot is programmed, resulting in avatars that do not accurately portray the figures they are supposed to represent.

These AI interviews immediately raised questions not only about ethics in the nascent field of artificial intelligence, but about the ethics of conducting such an “interview” at all in the name of journalism. Many Black users on Twitter were horrified at the thought of digitally exhuming Harriet Tubman, a venerated icon and ancestor. These concerns seem rooted in the understanding that the creators of these apps and bots are not interested in fidelity to the spirits of the dead, because they don’t seem to care much about the living Black people they continually fail to do right by.

Even The Washington Post acknowledges that the bot fails basic fact-checks, and Khan Academy stresses that the bot is not intended to serve as a historical record of events. Why introduce such a technology if it cannot be trusted even to impersonate an accurate “version” of a historical figure?

https://twitter.com/CiCiAdams_/status/1681310375319584771

UNESCO sets out basic tenets and recommendations for ethics in artificial intelligence on its website. The organization created the first global standard on the ethics of artificial intelligence, which was adopted by its 193 member states in 2021.

Its four pillars are Human Rights and Human Dignity, Living in Peace With an Emphasis on Creating a Just Society, Ensuring Diversity and Inclusion, and Environmentalism. Even a cursory reading of these pillars suggests that Khan Academy’s bot, which impersonates historical figures who cannot consent to the use of their names and likenesses, flagrantly violates ethical and, some would argue, moral guidelines.

If the dead have dignity, digging them up for what amounts to thought exercises shows a complete disregard for their wishes and little consideration of these ethical tenets. In its discussion of fairness and nondiscrimination, UNESCO writes: “AI actors should promote social justice, fairness, and non-discrimination while taking an inclusive approach to ensure AI’s benefits are accessible to all.”

Khan Academy would do well to take these words to heart, because at present social justice, fairness, and accessibility do not appear to be central to this project. The reactions to this experiment on social media make that plain to the world.
